The Future of AI: Is Test-Time Scaling the Next Big Thing?

Nvidia’s Earnings Report Sparks Debate

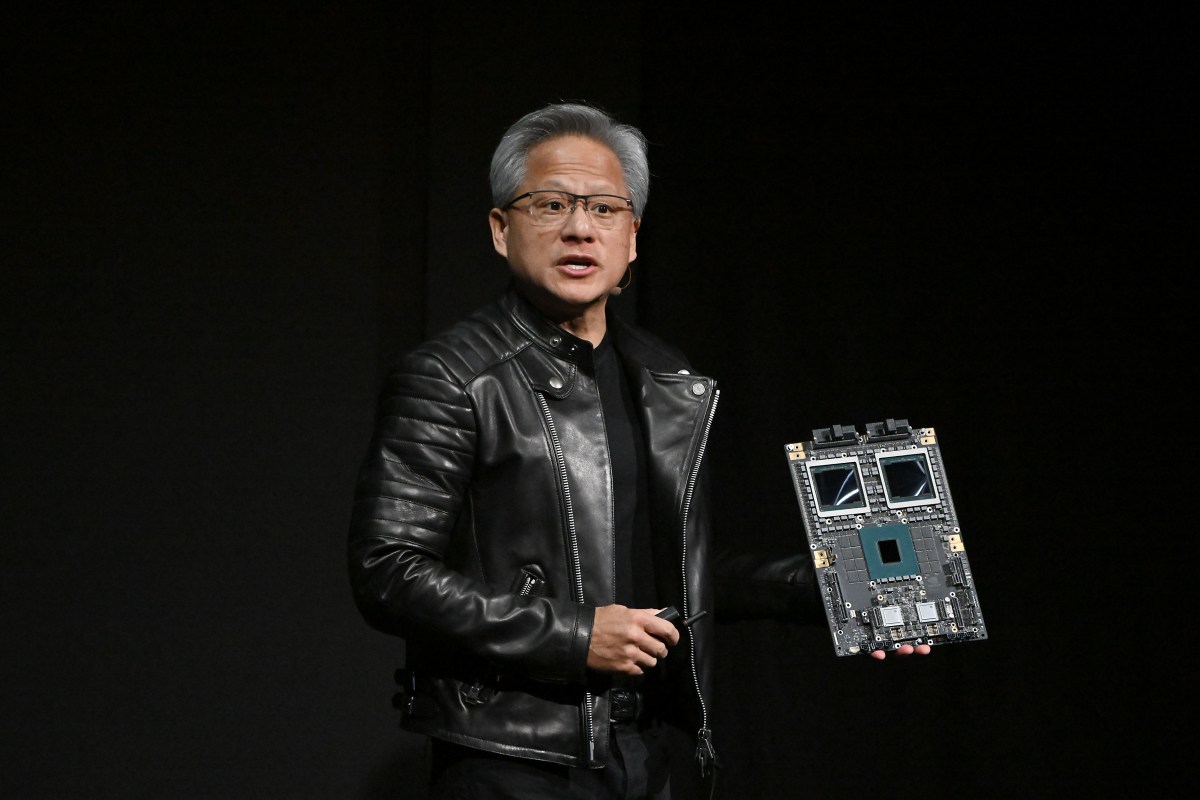

Nvidia recently reported an impressive $19 billion in net income for the last quarter, but investors remain cautious about the company’s future growth. During the earnings call, analysts questioned CEO Jensen Huang about how Nvidia would navigate a potential shift in AI development strategies, particularly towards methods like test-time scaling. The questions show how quickly the AI industry is changing and why companies like Nvidia need to adapt to new trends.

Test-Time Scaling: A New Frontier in AI Development

One concept drawing attention in AI development is “test-time scaling,” popularized by OpenAI’s o1 model. The approach gives an AI model more computational resources during the inference phase, the stage where the model processes user input and generates a response. With more compute at this stage, a model can potentially deliver more accurate, nuanced, and insightful responses. Think of it like giving your brain a caffeine boost right when you need to solve a complex problem: it can lead to sharper thinking and better results.
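To make the idea concrete, here is a minimal sketch of one simple form of test-time scaling: sampling several answers and taking a majority vote. It is a toy illustration, not a description of how o1 works, since the article does not say. The `noisy_solver` function is a hypothetical stand-in for a single model sample, and the 40% per-sample accuracy and the correct answer of 42 are made-up assumptions.

```python
import random
from collections import Counter


def noisy_solver(correct_answer: int, p_correct: float, rng: random.Random) -> int:
    """Hypothetical stand-in for one model sample: right with probability p_correct."""
    if rng.random() < p_correct:
        return correct_answer
    # Otherwise return a wrong answer drawn from a few nearby values.
    return correct_answer + rng.choice([-3, -2, -1, 1, 2, 3])


def answer_with_budget(correct_answer: int, n_samples: int,
                       p_correct: float, rng: random.Random) -> int:
    """Spend more inference compute: draw n_samples answers, return the most common."""
    votes = Counter(
        noisy_solver(correct_answer, p_correct, rng) for _ in range(n_samples)
    )
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000,
             p_correct: float = 0.4, seed: int = 0) -> float:
    """Fraction of trials in which the majority-vote answer is correct."""
    rng = random.Random(seed)
    hits = sum(
        answer_with_budget(42, n_samples, p_correct, rng) == 42
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    # Larger sample budgets mean more compute spent per question at inference time.
    for n in (1, 5, 25):
        print(f"samples per question: {n:2d}  accuracy: {accuracy(n):.2f}")
```

In this toy setup, accuracy rises as the number of samples per question grows, even though the underlying "model" never changes. The cost is that each question consumes proportionally more inference compute, which is why the approach matters to chip makers.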

Nvidia Embraces Test-Time Scaling

Huang acknowledged the transformative potential of test-time scaling, calling it “one of the most exciting developments” and “a new scaling law.” He assured investors that Nvidia is well-positioned to capitalize on this trend. This aligns with recent statements from Microsoft CEO Satya Nadella, who also highlighted o1 as a significant advancement in AI development. Nvidia’s commitment to staying at the forefront of AI innovation suggests it is actively investing in research and development to support test-time scaling.

The Impact on the Chip Industry

This shift towards test-time scaling has profound implications for the chip industry. While Nvidia currently dominates the market for training AI models, startups like Groq and Cerebras are developing specialized chips optimized for AI inference. This could lead to increased competition in this rapidly evolving space, forcing established players like Nvidia to innovate further and potentially diversify their product offerings.

Continued Growth in Model Development

Despite reports suggesting a slowdown in generative model improvements, Huang emphasized that AI developers continue to enhance their models by adding more compute and data during the pretraining phase. Anthropic CEO Dario Amodei echoed this sentiment, stating that “foundation model pretraining scaling is intact and it’s continuing.” This indicates that while test-time scaling offers a new dimension to AI development, traditional methods of model improvement remain crucial.

Nvidia’s Focus on Inference

While most of Nvidia’s current computing workloads revolve around pretraining AI models, Huang believes that the future lies in AI inference. He envisions a world where AI models are widely deployed for various applications, leading to a surge in inference demand. Nvidia’s existing infrastructure and expertise in this area give it a significant advantage over emerging competitors. This strategic focus on inference positions Nvidia as a key player in shaping the future of AI deployment and accessibility.