Nvidia’s AI Revolution: Surpassing Moore’s Law?

The Future of AI: Nvidia’s CEO on Performance, Cost, and the Power of Reasoning

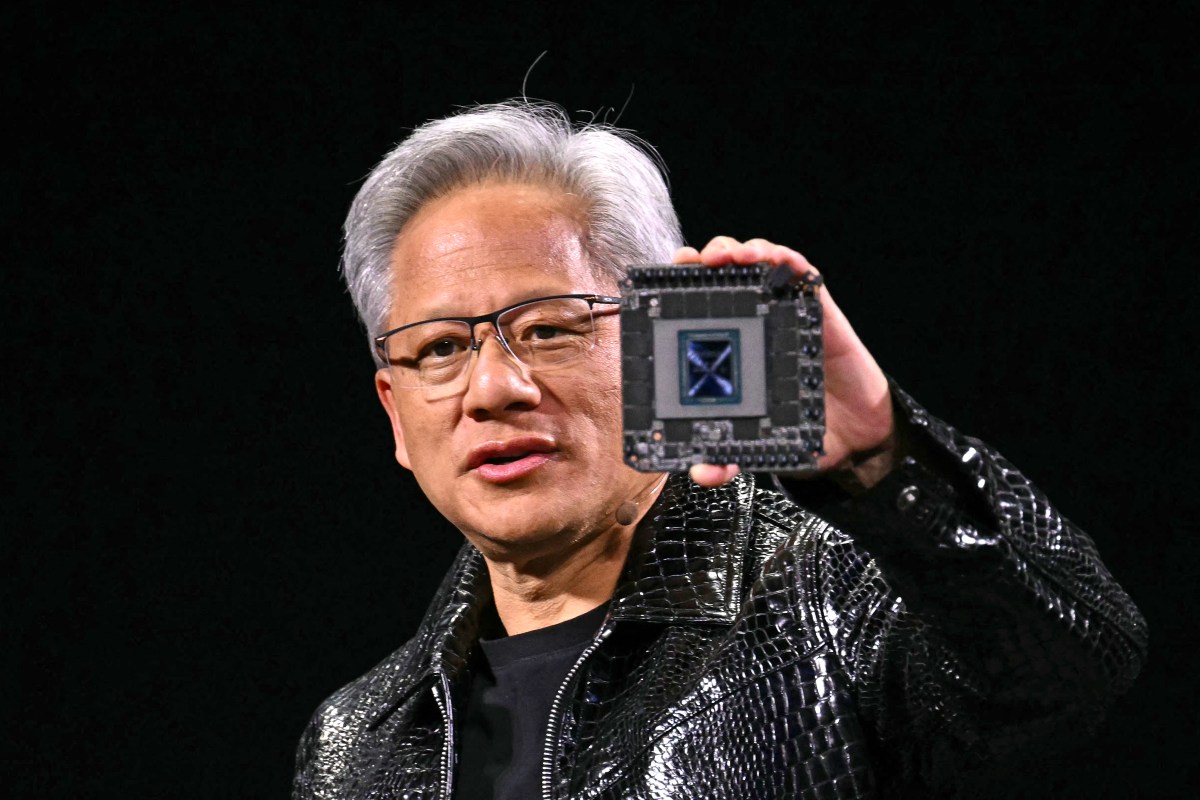

Nvidia CEO Jensen Huang says his company’s AI chips are improving faster than the historical pace set by Moore’s Law. Huang made the claim at CES 2025 in Las Vegas, where he delivered a keynote to a packed audience and unveiled the RTX 5090 GPU.

The Legacy of Moore’s Law and its Limitations

Formulated by Intel co-founder Gordon Moore in 1965, Moore’s Law originally predicted that the number of transistors on a chip would double roughly every year; in 1975 Moore revised the pace to roughly every two years. The result was exponential growth in computing power and a corresponding drop in cost, which fueled decades of rapid technological progress. In recent years, however, that pace has slowed. According to a report by TechCrunch, the industry faces significant challenges in scaling transistor density because of physical limits and rising manufacturing costs.

Nvidia’s AI-Powered Acceleration

Nvidia has emerged as a frontrunner in this new era. Its GPUs (graphics processing units), originally built for rendering graphics, are well suited to the massive computational demands of AI workloads. They excel at parallel processing, performing many calculations at once, which lets them train and run complex AI models quickly and efficiently.

Redefining AI Progress: Beyond Traditional Metrics

Nvidia’s CEO, Jensen Huang, argues that traditional metrics like transistor count are no longer sufficient for measuring progress in the realm of AI. He emphasizes the importance of considering factors such as training time, model accuracy, and real-world applications. The company’s focus on developing AI solutions that deliver tangible benefits across diverse industries, from healthcare to finance, underscores this shift in perspective.

Nvidia’s Position at the Forefront of AI

Nvidia has solidified its position as a dominant force in the AI landscape through strategic partnerships, acquisitions, and continuous innovation. The company’s CUDA platform, a parallel computing platform and programming model for its GPUs, has become the de facto standard for AI development. Nvidia’s investment in research and development has also produced advances in areas such as deep learning, natural language processing, and computer vision.

The Power of Data: A Cycle of Improvement

AI models thrive on data, and Nvidia recognizes the crucial role of data infrastructure in driving progress. The company’s efforts to develop high-performance computing platforms and storage solutions enable researchers and developers to handle massive datasets efficiently. This continuous cycle of data acquisition, analysis, and model refinement fuels ongoing advancements in AI capabilities.

A Legacy of Innovation: 1,000x Improvement in a Decade

Nvidia’s journey has been marked by a steady pursuit of innovation. According to Huang, the company’s AI chips today deliver a 1,000x improvement in AI performance over what it made a decade ago. That figure underscores Nvidia’s commitment to pushing the boundaries of what’s possible.

The Future of AI: How Nvidia’s Latest Superchip Is Redefining Performance

Huang argues that Nvidia’s AI chips are outpacing Moore’s Law because the company works on hardware, software, and algorithms together. This integrated approach lets Nvidia develop and optimize every layer at the same time rather than waiting on any single component. As evidence, the company says its latest datacenter superchip, the GB200 NVL72, is more than 30 times faster than its previous generation at running AI inference workloads.

“We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time,” Huang stated. “If you do that, then you can move faster than Moore’s Law because you can innovate across the entire stack.”

Beyond Traditional Metrics: A New Era of AI Progress

Huang rejects the idea that AI progress is slowing. Instead, he describes three active scaling laws: pre-training, in which a model learns patterns from large amounts of data; post-training, in which the model is fine-tuned with methods such as human feedback; and test-time compute, in which the model is given more computation during inference to “think” before it answers. Each stage improves model capabilities, and Nvidia points to its advances across all three to support its claim of surpassing Moore’s Law.

Huang also asserts that the cost of inference will fall as performance rises, echoing the way Moore’s Law drove down computing costs. If that holds, Nvidia’s AI chips could make powerful AI capabilities more widely available for diverse applications.

Nvidia: A Leader in the AI Revolution

As the leading provider of AI chips to leading AI labs such as Google, OpenAI, and Anthropic, Nvidia is positioned at the forefront of this technology. Its commitment to research and development, coupled with its ambitious vision for the future of AI, makes it a key driver of innovation in a rapidly evolving field.

Investor enthusiasm reflects this position: a surge in Nvidia’s share price has at times made it the most valuable company in the world. That valuation is a bet on both the potential of AI and Nvidia’s role in shaping its future.

The Rise of Inference: Nvidia’s GH200 Superchip Takes Center Stage

Nvidia’s H100 GPUs dominated AI training and powered many leading models, but the landscape is evolving. As companies prioritize deploying trained models in real-world applications, a process known as inference, Nvidia is promoting another product: the GH200 Grace Hopper Superchip.

The GH200 pairs Nvidia’s Arm-based Grace CPU with a Hopper-architecture GPU, the same family as the H100, and links the two with the high-bandwidth NVLink-C2C interconnect. This combined CPU-GPU design is intended to speed up processing and improve efficiency for both training and inference, making it well suited to the heavy data demands of modern AI applications.

Why Inference Matters: Bringing AI to Life

Inference is the crucial step that transforms trained AI models into tangible solutions. It involves using these models to make predictions or decisions on new data, bringing AI to life in diverse applications. From chatbots and recommendation systems to self-driving cars and medical diagnosis tools, inference powers the real-world impact of AI.

As AI adoption surges, the demand for efficient inference solutions skyrockets. Companies need to deploy models quickly and cost-effectively at scale, a challenge that the GH200 addresses head-on.

The GH200 Advantage: A Game Changer for AI

Here’s what sets the GH200 apart:

- Unified Architecture: Placing the CPU and GPU in a single module, connected by a coherent high-bandwidth link, reduces the data-transfer bottlenecks that arise when the two sit on separate boards. The result is faster processing and lower latency, both of which matter for real-time applications.

- High Bandwidth Memory (HBM3): The GH200 pairs its GPU with a large pool of high-bandwidth memory, so large models and datasets can be kept close to the compute units. This reduces time spent waiting on memory and improves overall throughput.

These features make the GH200 a game-changer for companies seeking to build and deploy powerful AI applications at scale.

Looking Ahead: The Future of AI Powered by Inference

The shift towards inference is reshaping the AI landscape. Nvidia is positioning the GH200 Superchip as a solution for businesses that want to deploy AI in real-world applications, and its combination of performance and efficiency points to a future where AI is integrated into far more products and services.

The Future of AI: Nvidia’s CEO on Performance, Cost, and the Power of Reasoning

In the rapidly evolving world of artificial intelligence, making powerful AI models more accessible and affordable is paramount. Nvidia CEO Jensen Huang recently addressed this challenge, emphasizing his company’s commitment to pushing the boundaries of performance and driving down costs through innovative hardware solutions.

Huang highlighted the growing importance of “test-time compute,” in which a model spends significant computational resources during inference to reason through a problem before answering. This approach, exemplified by OpenAI’s o3 model, can lead to high operational costs and underscores the need for efficient inference hardware like the GH200.
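To see why test-time compute raises costs, consider a rough sketch. It assumes a hypothetical model that solves a task with probability `p_single` on any one attempt, that attempts are independent, and that a perfect checker can pick out a correct answer when one exists. None of this describes how o3 actually works; it only illustrates the trade-off between extra inference compute and accuracy.

```python
def success_probability(p_single: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries is correct."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    return 1.0 - (1.0 - p_single) ** attempts


def relative_inference_cost(attempts: int) -> int:
    """Compute relative to one attempt, assuming cost is linear in attempts."""
    return attempts


if __name__ == "__main__":
    P_SINGLE = 0.2  # hypothetical single-attempt success rate, chosen for illustration
    for attempts in (1, 4, 16, 64):
        accuracy = success_probability(P_SINGLE, attempts)
        cost = relative_inference_cost(attempts)
        print(f"{attempts:>3} attempts: accuracy {accuracy:.3f}, compute {cost}x")
```

Under these assumptions, accuracy climbs with diminishing returns while compute grows linearly, which is why cheaper inference matters so much for reasoning models.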

Huang emphasized that Nvidia is dedicated to developing hardware that not only delivers exceptional performance but also reduces the cost of running AI models. This commitment aligns with the broader goal of making AI more accessible to a wider range of developers and businesses.

A New Era in AI Development

Nvidia’s focus on both performance and affordability signals a new era in AI development. By providing powerful hardware solutions like the GH200, Nvidia is empowering developers to build innovative applications that can solve real-world problems while keeping costs manageable. This shift towards accessible and efficient AI has the potential to revolutionize industries and drive progress across various sectors.

The Future of AI: Nvidia’s Vision for a Data-Driven World

Democratizing Access to Powerful AI Technology

OpenAI’s recent results have shed light on the escalating cost of running advanced AI models. When o3 achieved human-level scores on a test of general intelligence, it cost nearly $20 per task, roughly the price of an entire month of a ChatGPT Plus subscription. This raises crucial questions about the accessibility and affordability of cutting-edge AI technology.

Nvidia’s Response: The GB200 NVL72

A Leap Forward in AI Inference Performance

To address these challenges, Nvidia has unveiled its latest datacenter superchip, the GB200 NVL72. Nvidia says the rack-scale system is 30 to 40x faster at running AI inference workloads than its previous best-selling chip, the H100. Huang argues that this leap in performance will translate into lower costs for users of AI reasoning models like o3, paving the way for wider adoption and innovation.
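As a back-of-the-envelope illustration of that claim, the sketch below assumes that the cost per task falls in direct proportion to the inference speedup and that hardware and energy prices stay the same. Both are simplifying assumptions, not figures from Nvidia or OpenAI; the $20 baseline is the per-task figure cited above.

```python
def cost_per_task(baseline_cost_usd: float, speedup: float) -> float:
    """Cost per task if cost scales inversely with speedup (an assumption)."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_cost_usd / speedup


if __name__ == "__main__":
    BASELINE_COST_USD = 20.0  # "nearly $20 per task", as cited in the article
    for speedup in (30, 40):  # the 30 to 40x range Nvidia claims over the H100
        cost = cost_per_task(BASELINE_COST_USD, speedup)
        print(f"{speedup}x faster: about ${cost:.2f} per task")
```

Real-world savings would likely be smaller, since newer hardware costs more to buy and operate, but the sketch shows the scale of reduction that a 30 to 40x speedup could imply.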

A Long-Term Vision: Driving Down Prices Through Innovation

Nvidia’s CEO, Jensen Huang, envisions a future where increasingly performant chips drive down prices over time through technological advancements. This approach aligns with Nvidia’s history of innovation and its commitment to democratizing access to powerful AI technology. By making AI more affordable and accessible, Nvidia aims to empower individuals and organizations to harness the transformative potential of this groundbreaking field.

The Power of Data: A Virtuous Cycle of Improvement

Generating High-Quality Data for Enhanced Training

Huang believes that AI reasoning models can be used to generate high-quality data, further enhancing the training and refinement of AI models. This creates a virtuous cycle where improved performance leads to better data, which in turn fuels even greater advancements in AI capabilities. This self-reinforcing loop has the potential to accelerate progress in AI development at an unprecedented pace.

A Legacy of Innovation: 1,000x Improvement in a Decade

Surpassing Moore’s Law with Unwavering Commitment

Huang says Nvidia’s AI chips today are 1,000x better than what the company made a decade ago, a pace he describes as much faster than the standard set by Moore’s Law. He credits that progress to sustained investment across the whole stack, which has positioned Nvidia as a leader in the field.
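The comparison depends on which doubling period is used for Moore’s Law. The short calculation below compounds a doubling over ten years for Moore’s original 1965 projection (every year) and his 1975 revision (every two years), then works out the doubling period implied by a 1,000x gain. Note that Moore’s Law describes transistor counts while the 1,000x figure describes AI performance, so this is a comparison of growth rates only.

```python
import math


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total multiplier after `years` if a quantity doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def implied_doubling_period(total_factor: float, years: float) -> float:
    """Doubling period in years that yields `total_factor` growth over `years`."""
    return years / math.log2(total_factor)


if __name__ == "__main__":
    DECADE = 10.0
    yearly = growth_factor(DECADE, 1.0)      # 1965 projection: double every year
    two_yearly = growth_factor(DECADE, 2.0)  # 1975 revision: double every two years
    implied = implied_doubling_period(1000.0, DECADE)

    print(f"Doubling every year:      {yearly:,.0f}x per decade")
    print(f"Doubling every two years: {two_yearly:,.0f}x per decade")
    print(f"1,000x per decade implies doubling every {implied:.2f} years")
```

A 1,000x gain in ten years far outpaces the commonly cited two-year doubling (32x per decade) and is roughly on par with the original one-year projection (1,024x per decade).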